PromptPro

Problem Statement

Most people write terrible prompts. Not because they're bad at thinking, but because prompting is a new skill and nobody has muscle memory for it yet. You type something vague into ChatGPT, get a mediocre response, and blame the model. More often than not, the prompt was the problem.

Hypothesis

What if prompt improvement happened inline, right where you're already typing? No separate tool, no copy-pasting into a "prompt optimizer" — just highlight your text, click a button, and get a better version. Like Grammarly, but for talking to AI.

Solution

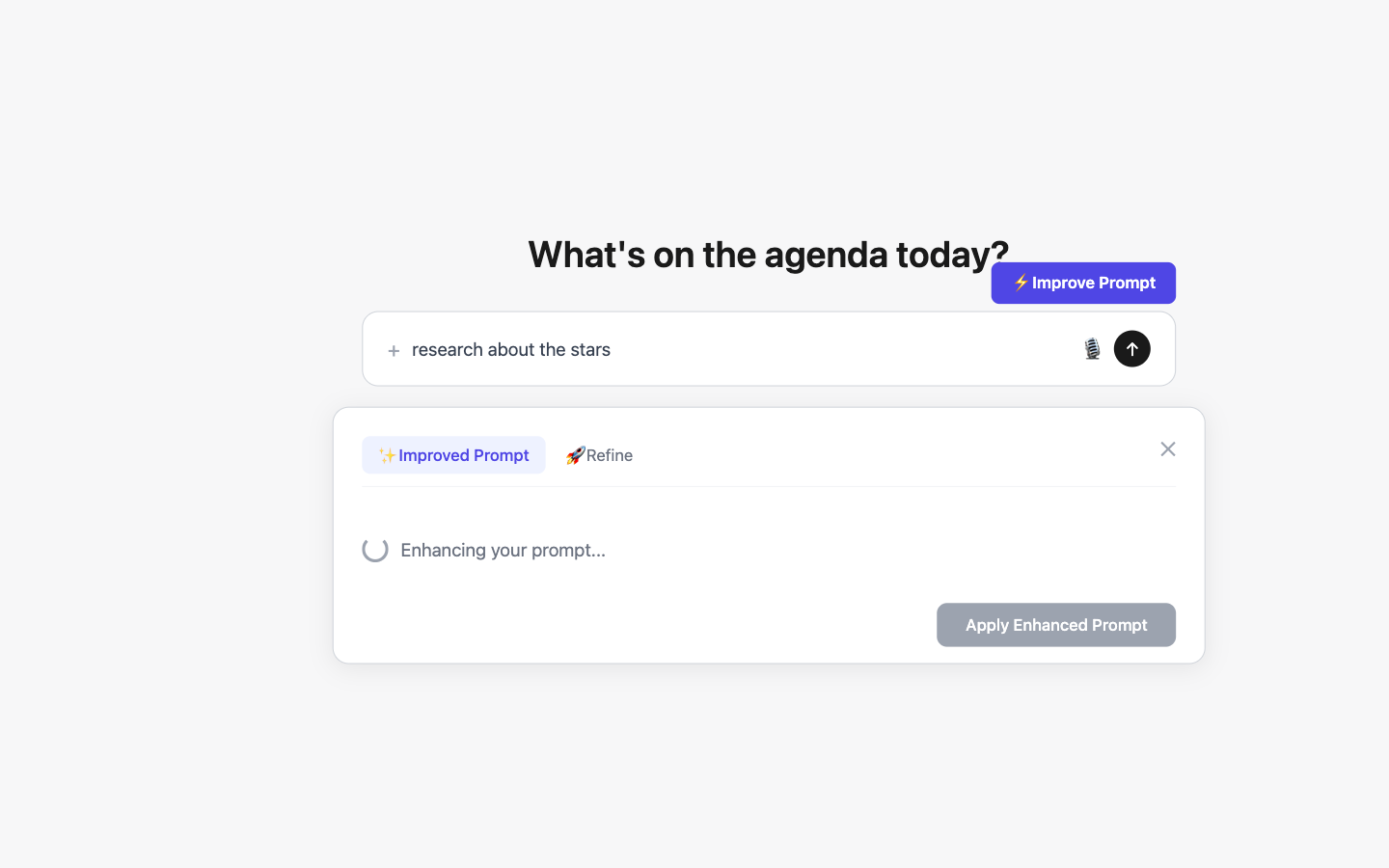

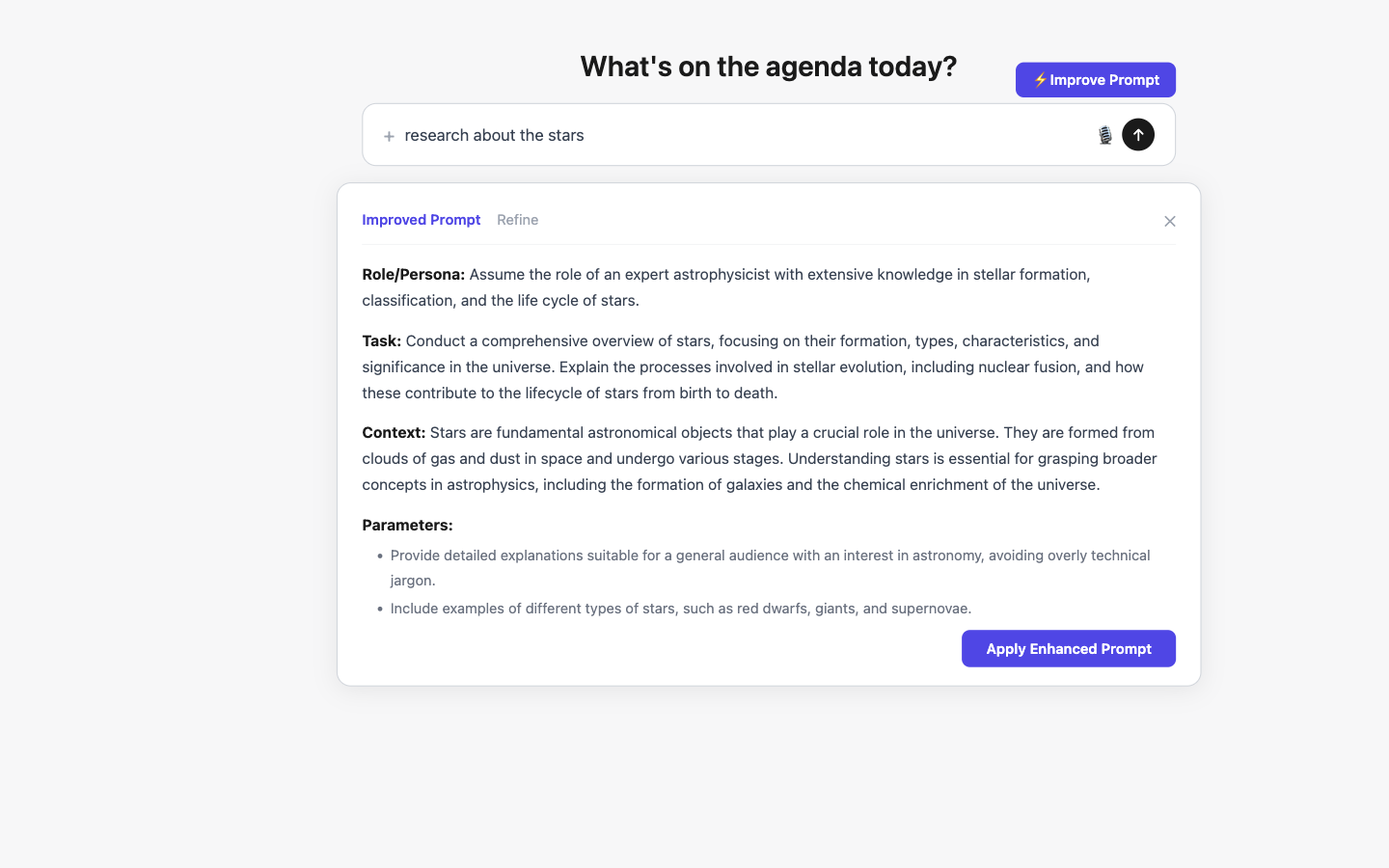

PromptPro is a Chrome extension that injects directly into ChatGPT and Microsoft Copilot, adding an "Improve" button next to the input box:

- Inline enhancement — select text or place cursor in any supported input, click Improve, get a restructured prompt with better clarity, structure, and specificity

- Persona-based refinement — optionally refine with a persona (Teacher, Executive, Technical Reviewer) to shape the tone and depth

- One-click apply — the enhanced prompt replaces your original text directly in the input box. Hit enter and go

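- BYOK (Bring Your Own Key): runs on your personal OpenAI API key, stored in Chrome sync storage. The key is sent only to OpenAI's API, never to a PromptPro server. Note that Chrome sync storage is synced through your Google account when Chrome sync is enabled, so the key is not confined to a single browser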

- Zero tracking — no analytics, no telemetry, no prompt logging. Your prompts stay yours
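The "select text or place cursor" and "one-click apply" behaviors can be sketched as a small pure function. This is a minimal illustration, not PromptPro's actual code: `InputState`, `targetSpan`, and `applyImprovement` are hypothetical names, and the sketch assumes the input exposes its text and a selection range the way a textarea does (the real ChatGPT and Copilot inputs may need different DOM handling).

```typescript
// Hypothetical helper types and functions; none of these names come from PromptPro's source.
interface InputState {
  text: string;           // full contents of the prompt box
  selectionStart: number; // caret position or selection start (inclusive)
  selectionEnd: number;   // caret position or selection end (exclusive)
}

// Picks the span to improve: the selection if there is one,
// otherwise the whole input (the "cursor only" case).
function targetSpan(state: InputState): { start: number; end: number } {
  const hasSelection = state.selectionEnd > state.selectionStart;
  return hasSelection
    ? { start: state.selectionStart, end: state.selectionEnd }
    : { start: 0, end: state.text.length };
}

// Swaps the target span for the improved text and puts the caret right after it,
// so the user can hit enter immediately.
function applyImprovement(state: InputState, improved: string): InputState {
  const { start, end } = targetSpan(state);
  const text = state.text.slice(0, start) + improved + state.text.slice(end);
  const caret = start + improved.length;
  return { text, selectionStart: caret, selectionEnd: caret };
}
```

Keeping this logic free of DOM calls means it can be unit-tested without a browser; a content script would only need to read the current state from the page and write the result back.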

Screenshots

Technical Architecture

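The OpenAI API key is stored in chrome.storage.sync. Content scripts injected into ChatGPT and Copilot handle the UI, while a background service worker mediates all network calls. An optional FastAPI + MongoDB backend is scaffolded for future prompt history but is not used in v1.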
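One way the background worker's mediating role could look is sketched below. Everything here is an assumption made for illustration: the `KeyStore` and `HttpPost` abstractions, the message shapes, the system instruction, and the model name are hypothetical and not taken from PromptPro's source. In a real extension, `KeyStore` would wrap `chrome.storage.sync.get`, `HttpPost` would wrap `fetch`, and the handler would be registered with `chrome.runtime.onMessage`.

```typescript
// All names below are hypothetical; this is not PromptPro's actual source.

// Minimal stand-ins so the sketch runs outside a browser.
interface KeyStore {
  getApiKey(): Promise<string | undefined>;
}
type HttpPost = (
  url: string,
  headers: Record<string, string>,
  body: string,
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

interface ImproveRequest { type: "improve"; prompt: string }
type ImproveResponse =
  | { ok: true; improved: string }
  | { ok: false; error: string };

const OPENAI_URL = "https://api.openai.com/v1/chat/completions";
const MODEL = "gpt-4o-mini"; // assumption: the write-up does not name a model

// Intended to run in the background worker, so the content script never handles the key.
async function handleImprove(
  msg: ImproveRequest,
  store: KeyStore,
  post: HttpPost,
): Promise<ImproveResponse> {
  // Read the key on every call instead of caching it in a global:
  // MV3 service workers can be stopped and restarted between messages.
  const apiKey = await store.getApiKey();
  if (!apiKey) return { ok: false, error: "No API key configured" };

  const body = JSON.stringify({
    model: MODEL,
    messages: [
      { role: "system", content: "Rewrite the user's prompt to be clearer and more specific." },
      { role: "user", content: msg.prompt },
    ],
  });
  const res = await post(
    OPENAI_URL,
    { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
    body,
  );
  if (!res.ok) return { ok: false, error: `OpenAI request failed: ${res.status}` };

  // Chat Completions responses carry the text at choices[0].message.content.
  const data = (await res.json()) as {
    choices?: { message?: { content?: string } }[];
  };
  const improved = data.choices?.[0]?.message?.content;
  return improved
    ? { ok: true, improved }
    : { ok: false, error: "Empty response" };
}
```

Passing the store and HTTP function in as parameters keeps the handler testable with fakes and keeps all network access in one place, which matches the "background worker mediates all network calls" design.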

Key Product Decisions

- Inject, don't redirect. The extension lives inside ChatGPT and Copilot, not in a separate tab. Users shouldn't context-switch to improve their prompts — it should happen where they already are

- Enhancement, not generation. PromptPro improves what you wrote, it doesn't write for you. This preserves intent while adding structure — which is what most prompts actually need

- Personas are optional, not default. Most users just want "make this clearer." Power users get persona modes. Layering complexity rather than frontloading it

- Backend exists but sleeps. The FastAPI service is scaffolded for prompt history and team features, but v1 is fully client-side. Ship the simplest thing that works, then layer on
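The "enhancement, not generation" and "personas are optional" decisions could translate into how the request to the model is assembled. The sketch below is illustrative only: the instruction wording, the persona hints, and the names `BASE_INSTRUCTION`, `PERSONA_HINTS`, and `buildMessages` are assumptions, since the write-up does not show PromptPro's actual prompts.

```typescript
// Hypothetical names and wording; PromptPro's real instructions are not shown in the source.
type Persona = "Teacher" | "Executive" | "Technical Reviewer";

interface ChatMessage {
  role: "system" | "user";
  content: string;
}

// Enhancement, not generation: the model is told to rewrite the draft, not answer it.
const BASE_INSTRUCTION =
  "Rewrite the user's prompt so it is clearer, better structured, and more specific. " +
  "Preserve the original intent. Do not answer the prompt; return only the rewritten prompt.";

// Illustrative guesses at what each persona mode might emphasize.
const PERSONA_HINTS: Record<Persona, string> = {
  Teacher: "Shape the prompt to ask for step-by-step explanations pitched at a learner.",
  Executive: "Shape the prompt to ask for a concise, decision-oriented answer.",
  "Technical Reviewer": "Shape the prompt to ask for precise, critical, detail-level feedback.",
};

// Persona is an optional argument, so the default path stays "just make this clearer".
function buildMessages(draft: string, persona?: Persona): ChatMessage[] {
  const system = persona
    ? `${BASE_INSTRUCTION} ${PERSONA_HINTS[persona]}`
    : BASE_INSTRUCTION;
  return [
    { role: "system", content: system },
    { role: "user", content: draft },
  ];
}
```

The user's draft is passed through unchanged as the user message, and the persona only appends to the system instruction, so complexity is layered on top of the default rather than replacing it.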

Impact & Metrics

Lessons Learned

- The best AI tools are invisible. Nobody wants to learn a new interface to use AI better. Embedding the enhancement directly into the tools people already use removes most of the adoption friction

- Prompt quality is a UX problem, not an AI problem. The models are capable enough — the bottleneck is the human-to-model interface. Improving that interface is where the leverage is

- MV3 is painful but worth it. Chrome's Manifest V3 restrictions (no persistent background pages, limited APIs) forced a cleaner architecture. The service worker pattern is actually better for this use case